Kubernetes Overview

With the widespread adoption of containers across organizations, Kubernetes, a container orchestration platform, has become the de facto standard for deploying and operating containerized applications and a core part of many DevOps toolchains.

Kubernetes was originally developed at Google and released as open source in 2014. It was inspired by Borg, Google's internal cluster management system, and builds on 15 years of Google's experience running containerized workloads, together with valuable contributions from the open-source community.

What is Kubernetes?

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.
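"Declarative configuration" means you describe the state you want (which image, how many copies) and let Kubernetes work out how to get there. As a minimal sketch, the Python below builds a standard `apps/v1` Deployment object as a plain dictionary and prints it as JSON; `kubectl apply -f` accepts JSON manifests as well as YAML. The helper `make_deployment`, the name `web`, the label scheme, and the image `nginx:1.25` are hypothetical choices for illustration.

```python
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment describing the desired state."""
    labels = {"app": name}  # hypothetical label scheme; any key/value pair works
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # desired number of pod copies
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = make_deployment("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

Nothing in the manifest says *how* to start three containers; Kubernetes compares this desired state with what is actually running and acts on the difference.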

What are the benefits of using k8s?

Kubernetes (k8s) is an open-source container orchestration platform that is widely used for managing and deploying containerized applications. Some of the benefits of using k8s include:

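Scalability: Kubernetes makes it easy to scale your application horizontally (adding or removing pod replicas, manually or with the Horizontal Pod Autoscaler) or vertically (giving each pod more or less CPU and memory), so you can add resources to meet demand and release them when they are no longer needed.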

High availability: Kubernetes has built-in features that help keep applications available, such as running multiple replicas, automatic container restarts, rolling updates that replace pods gradually, and replacing pods from a failed node on healthy nodes.

Resource utilization: Kubernetes helps you make efficient use of your infrastructure by scheduling containers onto nodes based on each container's resource requests and the capacity still available on each node.

Self-healing: Kubernetes continuously works to keep containers running in the state you declared. If a container fails, Kubernetes can automatically restart it; if a pod or its node is lost, a controller can replace it with a new instance.

Automation: Kubernetes allows you to automate deployment, scaling, and management tasks, which can save time and reduce the risk of human error.

Portability: Kubernetes supports multiple container runtimes and can run on a variety of platforms, including public clouds, private clouds, and on-premises data centers.

Community support: Kubernetes has a large and active community that provides support, documentation, and a wide range of third-party tools and plugins.
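To make the Scalability point concrete: the Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)], with no change made when the ratio is within a tolerance (0.1 by default). The sketch below implements only that rule plus min/max clamping; the real controller also accounts for pod readiness, missing metrics, and stabilization windows. The function name and the example CPU figures are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current * current_metric / target_metric), clamped."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured minReplicas / maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 6 pods averaging 30% CPU against a 60% target -> scale in.
print(desired_replicas(6, current_metric=30, target_metric=60))
```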

Overall, Kubernetes provides a powerful and flexible platform for managing containerized applications at scale, with a wide range of benefits that can help organizations improve efficiency, reliability, and agility.

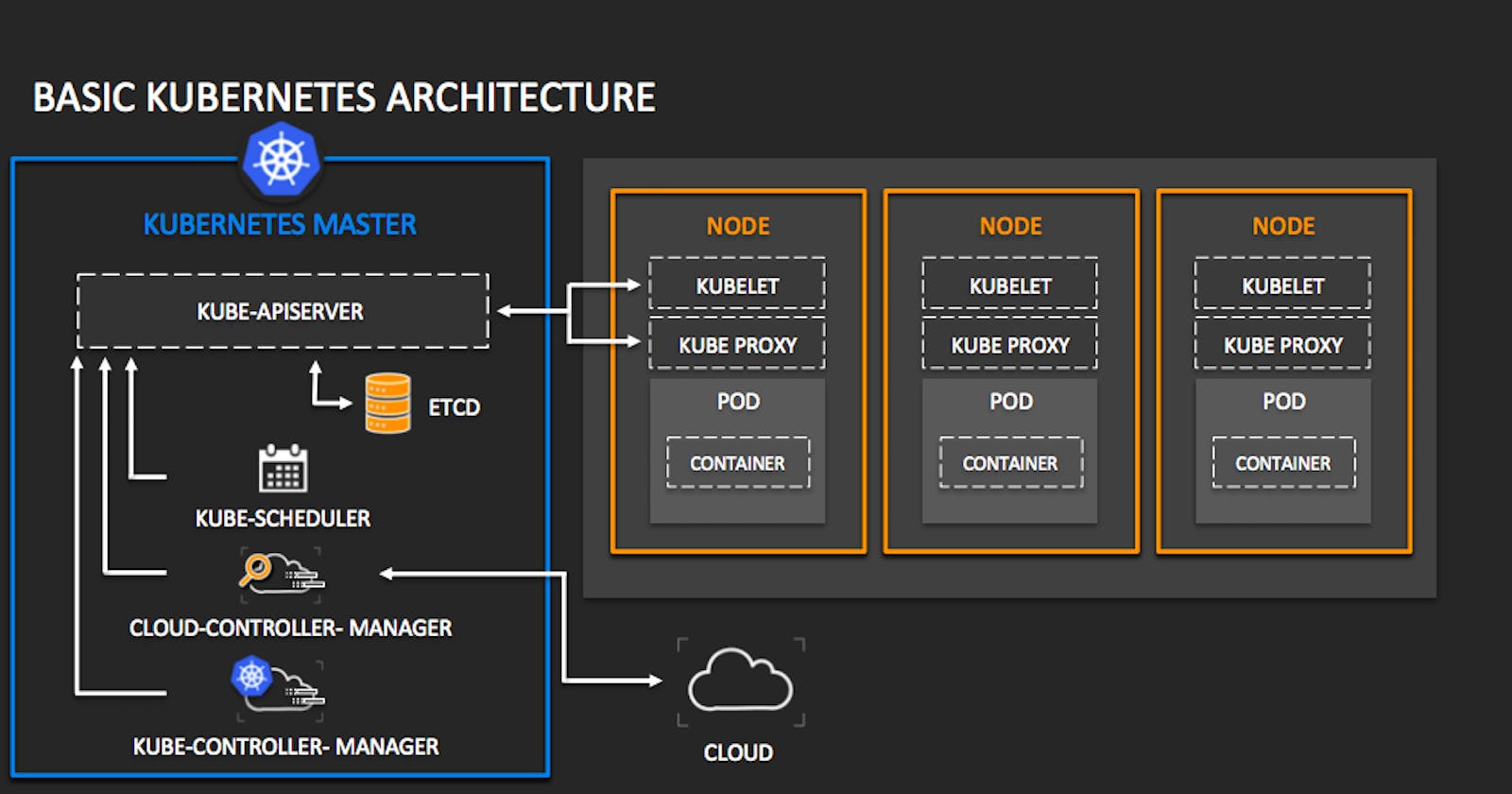

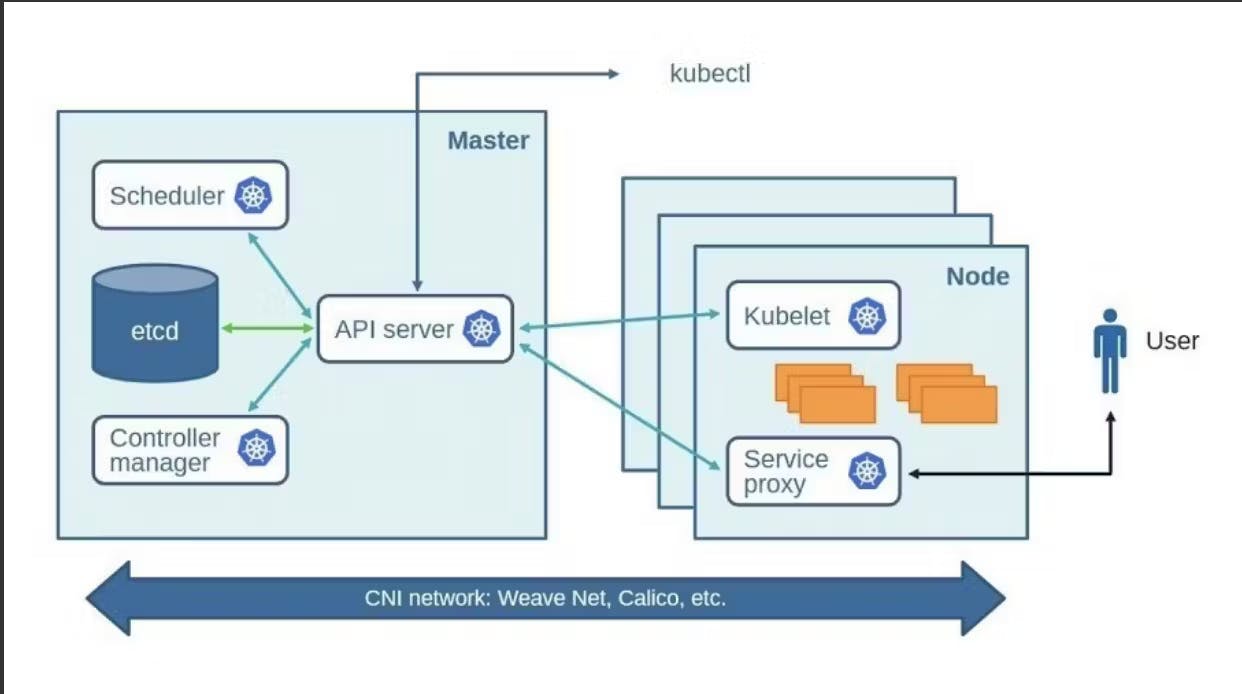

Explain the architecture of Kubernetes

Kubernetes has a modular architecture that consists of several components working together to provide a platform for deploying, scaling, and managing containerized applications. The key components of the Kubernetes architecture are:

Master node: The master node (called a control plane node in current Kubernetes documentation) runs the control plane of the Kubernetes cluster. It is responsible for managing the overall state of the cluster, scheduling workloads, and monitoring the health of the nodes and applications. It typically runs several components, including the API server, etcd, the controller manager, and the scheduler.

Nodes: Nodes are the worker machines that run the containerized applications. Each node runs a container runtime, such as containerd or CRI-O, together with the kubelet, and hosts one or more pods, each containing one or more containers. Nodes are managed by the control plane: the scheduler assigns pods to nodes, and the kubelet on each node picks up its assigned pods from the API server.

API server: The API server is the central management point for the Kubernetes cluster. It exposes a RESTful API that allows users and applications to interact with the cluster. The API server handles requests for creating, updating, and deleting Kubernetes objects, such as pods, services, and deployments.

etcd: etcd is a consistent, distributed key-value store that holds all cluster data, including the configuration and current state of every Kubernetes object. It is the primary source of truth for the state of the cluster. Only the API server talks to etcd directly; the controller manager and scheduler read and update cluster state through the API server.
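Two properties of etcd matter most to Kubernetes: objects are stored under hierarchical keys (by default prefixed with `/registry`, for example `/registry/pods/<namespace>/<name>`), and every write gets a new revision number, which the API server uses for optimistic concurrency. The class below is a toy in-memory model of those two ideas, not the etcd client API; `ToyStore`, its methods, and the placeholder values `spec-v1`/`spec-v2` are hypothetical (real values are serialized API objects).

```python
from typing import Dict, Optional, Tuple


class ToyStore:
    """Toy in-memory model of a revisioned key-value store (NOT the etcd client API)."""

    def __init__(self) -> None:
        self.revision = 0  # global counter, incremented on every write
        self.data: Dict[str, Tuple[str, int]] = {}  # key -> (value, revision of last write)

    def put(self, key: str, value: str, expected_rev: Optional[int] = None) -> int:
        """Write value; with expected_rev, refuse the write if the key changed since then."""
        if expected_rev is not None:
            current_rev = self.data[key][1] if key in self.data else 0
            if current_rev != expected_rev:
                raise ValueError(f"conflict on {key}: expected rev {expected_rev}, found {current_rev}")
        self.revision += 1
        self.data[key] = (value, self.revision)
        return self.revision

    def get_prefix(self, prefix: str) -> Dict[str, str]:
        """Range read over a key prefix, e.g. every pod in one namespace."""
        return {k: v for k, (v, _) in sorted(self.data.items()) if k.startswith(prefix)}


store = ToyStore()
rev = store.put("/registry/pods/default/web-0", "spec-v1")
store.put("/registry/pods/default/web-1", "spec-v1")
store.put("/registry/pods/kube-system/dns-0", "spec-v1")
# Succeeds: nobody has modified web-0 since revision `rev`.
store.put("/registry/pods/default/web-0", "spec-v2", expected_rev=rev)
# Listing one namespace is a prefix read; dns-0 is not included.
print(store.get_prefix("/registry/pods/default/"))
```

Repeating the last `put` with the same stale `expected_rev` would now raise a conflict, which is the same idea behind the "object has been modified" errors clients see when they update an outdated copy of an object.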

Controller manager: The controller manager is responsible for managing the controllers that monitor and control the state of the Kubernetes objects. Controllers ensure that the desired state of the objects is maintained, and they handle tasks such as scaling applications and performing rolling updates.
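Each controller runs a reconciliation loop: observe the current state, compare it with the desired state, and act to close the gap. The sketch below models a single pass of a ReplicaSet-like loop with plain Python objects; `ReplicaSetState`, `reconcile`, and the `pod-N` naming are hypothetical, and the real ReplicaSet controller chooses which pods to delete more carefully than "newest first".

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReplicaSetState:
    desired: int                                   # spec: how many pods should exist
    pods: List[str] = field(default_factory=list)  # observed: pods currently running
    next_id: int = 0                               # hypothetical counter for naming new pods


def reconcile(state: ReplicaSetState) -> List[str]:
    """One pass of a control loop: compare desired vs. observed and act on the difference."""
    actions: List[str] = []
    # Too few pods: create the missing ones.
    while len(state.pods) < state.desired:
        name = f"pod-{state.next_id}"
        state.next_id += 1
        state.pods.append(name)
        actions.append(f"create {name}")
    # Too many pods: delete the surplus (newest first in this sketch).
    while len(state.pods) > state.desired:
        actions.append(f"delete {state.pods.pop()}")
    return actions


rs = ReplicaSetState(desired=3)
print(reconcile(rs))      # starts from zero, so it creates three pods
rs.pods.remove("pod-1")   # simulate a pod that crashed or was deleted
print(reconcile(rs))      # creates one replacement
rs.desired = 1            # user scales down
print(reconcile(rs))      # deletes two pods
print(reconcile(rs))      # already converged: no actions
```

Because each pass only looks at the difference between desired and observed state, running the loop again after it has converged is harmless, which is what lets controllers recover from crashes simply by re-running.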

Scheduler: The scheduler is responsible for scheduling workloads onto the nodes in the cluster. It selects the best node for each workload based on factors such as resource requirements, node availability, and workload affinity.
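The kube-scheduler works in two phases: filtering removes nodes that cannot run the pod, and scoring ranks the remaining nodes. The sketch below is a heavily simplified version that filters on CPU and memory requests and scores by how much capacity would remain free (a "least allocated" style of scoring); the real scheduler runs many plugins covering affinity, taints, topology spreading, and more. All class names, node names, and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: float         # allocatable CPU cores
    mem_capacity: float         # allocatable memory, GiB
    cpu_requested: float = 0.0  # sum of CPU requests of pods already on the node
    mem_requested: float = 0.0  # sum of memory requests of pods already on the node


@dataclass
class PodRequest:
    cpu: float  # CPU cores requested
    mem: float  # memory requested, GiB


def fits(pod: PodRequest, node: Node) -> bool:
    """Filter step: the node must have room for the pod's requests."""
    return (node.cpu_requested + pod.cpu <= node.cpu_capacity
            and node.mem_requested + pod.mem <= node.mem_capacity)


def score(pod: PodRequest, node: Node) -> float:
    """Score step: average fraction of capacity left free after placing the pod."""
    cpu_left = (node.cpu_capacity - node.cpu_requested - pod.cpu) / node.cpu_capacity
    mem_left = (node.mem_capacity - node.mem_requested - pod.mem) / node.mem_capacity
    return (cpu_left + mem_left) / 2


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    feasible = [n for n in nodes if fits(pod, n)]
    if not feasible:
        return None  # no node fits; the pod would stay Pending
    return max(feasible, key=lambda n: score(pod, n)).name


nodes = [
    Node("node-a", 4, 16, cpu_requested=3.5, mem_requested=4),  # not enough CPU left
    Node("node-b", 4, 16, cpu_requested=1, mem_requested=8),
    Node("node-c", 8, 32, cpu_requested=2, mem_requested=8),
]
pod = PodRequest(cpu=1, mem=2)
print(schedule(pod, nodes))
```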

Kubelet: The kubelet is an agent that runs on each node in the cluster. It watches the API server for pods assigned to its node, starts and stops their containers through the container runtime, and reports the state of those containers back to the API server.
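One visible piece of the kubelet's work is restarting failed containers. The Kubernetes documentation describes the default behavior as an exponential back-off (10s, 20s, 40s, and so on) capped at five minutes, reset once a container has run for ten minutes without problems; while a pod is waiting out this delay it typically shows the `CrashLoopBackOff` status. The sketch below models only that documented timing for a single container; `BackoffTracker` and its parameters are hypothetical, and exact values may differ between Kubernetes releases.

```python
class BackoffTracker:
    """Sketch of the documented default restart back-off for one container."""

    def __init__(self, base: float = 10.0, cap: float = 300.0, reset_after: float = 600.0) -> None:
        self.base = base                # first delay, seconds
        self.cap = cap                  # maximum delay (five minutes)
        self.reset_after = reset_after  # a run this long (ten minutes) resets the back-off
        self.delay = 0.0                # last delay used; 0 means "no recent failures"

    def next_delay(self, ran_for: float) -> float:
        """Return the wait before restarting a container that exited after `ran_for` seconds."""
        if ran_for >= self.reset_after:
            self.delay = 0.0  # the container was healthy long enough: start over
        # First failure waits `base`; each further failure doubles, up to `cap`.
        self.delay = self.base if self.delay == 0 else min(self.delay * 2, self.cap)
        return self.delay


tracker = BackoffTracker()
# A container that crashes two seconds after every start.
print([tracker.next_delay(ran_for=2) for _ in range(7)])
```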

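Container runtime: The container runtime is the software that runs the containers on the nodes. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O. Built-in support for Docker Engine (dockershim) was removed in Kubernetes 1.24; Docker Engine can still be used through the cri-dockerd adapter, and images built with Docker run on any CRI-compatible runtime.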

Overall, the Kubernetes architecture provides a flexible and scalable platform for deploying and managing containerized applications. The modular design allows users to customize the platform to meet their specific needs, while the robust set of components provides a reliable and resilient foundation for running mission-critical applications.

What is the Control Plane?

The Control Plane manages the worker nodes and the Pods in the cluster. In production environments, the control plane usually runs across multiple computers and a cluster usually runs multiple nodes, providing fault-tolerance and high availability.
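How many control plane machines are needed for fault tolerance is usually determined by etcd, which uses the Raft consensus algorithm and can accept writes only while a majority (a quorum) of its members is available. A small sketch of that arithmetic, assuming the cluster state lives in a single etcd cluster of n members; the helper names are hypothetical.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` members."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a quorum remains."""
    return members - quorum(members)


for n in range(1, 8):
    print(f"{n} members: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

This is why three or five members are common choices: going from three to four members raises the quorum from 2 to 3 without tolerating any additional failure.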

What is the difference between kubectl and kubelet?

Despite their similar names, kubectl and kubelet serve very different purposes, and only kubectl is a command-line tool meant for users.

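Kubectl is the Kubernetes command-line tool that is used for managing and interacting with Kubernetes clusters. It allows users to create, inspect, and modify Kubernetes objects, such as pods, services, and deployments. Kubectl communicates with the Kubernetes API server to send commands and receive status updates about the cluster. Kubectl is typically used by administrators and developers who need to manage the overall state of the cluster and the applications running on it.

The kubelet, by contrast, is an agent: a long-running process on every node in the cluster. It watches the API server for pods scheduled to its node, has the container runtime start and stop their containers, runs their liveness and readiness probes, and reports node and pod status back to the API server. Users rarely interact with the kubelet directly.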

Explain the role of the API server.

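The API server (kube-apiserver) is the front end of the Kubernetes control plane and the central management point for the cluster. It exposes a RESTful API that users, tools such as kubectl, and the other cluster components use to interact with the cluster. It handles requests to create, read, update, and delete Kubernetes objects such as pods, services, and deployments: each request is authenticated, authorized, and passed through admission control, and accepted changes are validated and persisted in etcd. The API server is the only component that talks to etcd directly, and clients can watch it for changes instead of repeatedly polling.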
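Every Kubernetes object is addressed by a predictable REST path: resources in the core API group live under `/api/v1`, while resources in named groups live under `/apis/<group>/<version>`, with an optional `namespaces/<namespace>` segment for namespaced resources. The helper below is a hypothetical sketch that assembles such paths; it does not contact a cluster.

```python
from typing import Optional


def resource_path(group: str, version: str, plural: str,
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build the API server path for a resource collection or a single object."""
    # The core ("legacy") group has an empty name and lives under /api;
    # named groups such as "apps" live under /apis/<group>.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace is not None:
        parts.append(f"namespaces/{namespace}")
    parts.append(plural)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


print(resource_path("", "v1", "pods", namespace="default"))
print(resource_path("apps", "v1", "deployments", namespace="default", name="web"))
print(resource_path("", "v1", "nodes"))
```

The HTTP verb selects the operation: GET reads an object or lists a collection, POST creates, PUT replaces, PATCH updates part of an object, and DELETE removes it; adding `?watch=true` to a list request streams changes. You can read such a path from a real cluster with `kubectl get --raw /api/v1/namespaces/default/pods`.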